

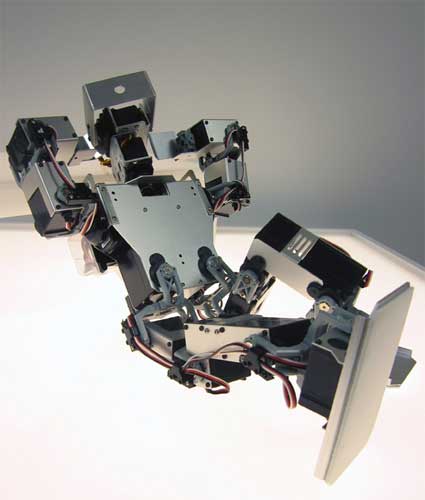

Fernando Orellana and Brendan Burns have collaborated on a new artwork that investigates one possible form of human-robot relationship.

Using brainwave activity and eye movements recorded during REM (rapid eye movement) sleep to determine the robot’s behaviors and head positions, “Sleep Waking” acts as a way to “play back” dreams.

I asked Fernando to give us more details about the robot:

How does Sleep Waking work exactly?

I spent a night at The Albany Regional Sleep Disorder Center in Albany, NY. There they wired me up with a variety of sensors, recording everything from EEG to EKG to eye positioning data. We then took that data and interpreted it in two ways:

We simply apply the eye position data to the direction the robot’s head is looking. So if my eye was looking left, the robot looks left.
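The interview does not say how the recorded eye positions were converted into head movements. As a rough illustration only, a minimal Python sketch could scale a normalized horizontal eye position linearly into a head yaw angle. The function names, the [-1, 1] input range, the sign convention (negative means left) and the 60° limit are all hypothetical, not details of the actual piece.

```python
from typing import List, Sequence


def eye_to_head_angle(eye_x: float, max_angle_deg: float = 60.0) -> float:
    """Map a normalized horizontal eye position to a head yaw angle in degrees.

    Hypothetical convention: eye_x lies in [-1.0, 1.0], where -1.0 is fully
    left and 1.0 is fully right. Out-of-range values are clamped so the head
    is never commanded past max_angle_deg.
    """
    clamped = max(-1.0, min(1.0, eye_x))
    return clamped * max_angle_deg


def replay_eye_track(samples: Sequence[float],
                     max_angle_deg: float = 60.0) -> List[float]:
    """Convert a recorded sequence of eye positions into head-angle commands."""
    return [eye_to_head_angle(x, max_angle_deg) for x in samples]


if __name__ == "__main__":
    recorded = [-1.0, -0.5, 0.0, 0.25, 1.2]
    print(replay_eye_track(recorded))
    # [-60.0, -30.0, 0.0, 15.0, 60.0]
```

A clamped linear mapping keeps the literal “eye looks left, robot looks left” relationship while protecting the servo from out-of-range commands; smoothing or rate limiting would be natural additions.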

The use of the EEG data is a bit more complex. Running it through a machine learning algorithm, we identified several patterns in a sample of the data set (covering both REM and non-REM events). We then associated preprogrammed robot behaviors with these patterns. Using the patterns like filters, we process the entire data set, letting the robot act out each behavior as the corresponding pattern surfaces in the signal. Periods of high activity (REM) were associated with dynamic behaviors (flying, being scared, etc.) and periods of low activity with more subtle ones (gesturing, looking around, etc.). The “behaviors” the robot demonstrates are some of the actions I might perform (along with everyone else) in a dream.
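The algorithm and the patterns themselves are not described in the interview. The Python sketch below assumes the patterns have already been learned and treats each one as a template: the EEG trace is cut into fixed-length windows, each window is compared with every template using a normalized correlation, and the best match above a threshold triggers its associated behavior. Template matching is a swapped-in technique chosen for illustration; the template shapes, window handling, threshold and behavior labels are all hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def normalized_correlation(a: Sequence[float], b: Sequence[float]) -> float:
    """Correlation between two equal-length windows, in the range -1..1.

    Returns 0.0 for a flat window, where correlation is undefined.
    """
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return num / den if den > 0 else 0.0


def detect_behaviors(signal: Sequence[float],
                     templates: Dict[str, List[float]],
                     behaviors: Dict[str, str],
                     threshold: float = 0.8) -> List[Tuple[int, str]]:
    """Scan the signal window by window and emit (start_index, behavior) events.

    All templates are assumed to share one length, which is also the window
    length; windows do not overlap. At most one behavior is emitted per
    window: the best-matching template, if its score reaches the threshold.
    """
    width = len(next(iter(templates.values())))
    events = []
    for start in range(0, len(signal) - width + 1, width):
        window = signal[start:start + width]
        best_name, best_score = None, threshold
        for name, template in templates.items():
            score = normalized_correlation(window, template)
            if score >= best_score:
                best_name, best_score = name, score
        if best_name is not None:
            events.append((start, behaviors[best_name]))
    return events


if __name__ == "__main__":
    # Hypothetical learned patterns: a fast oscillation and a slow wave.
    templates = {
        "fast_burst": [1.0, -1.0, 1.0, -1.0],
        "slow_wave": [1.0, 1.0, -1.0, -1.0],
    }
    # Hypothetical mapping: fast (high) activity -> dynamic behavior,
    # slow (low) activity -> subtle behavior.
    behaviors = {"fast_burst": "flying", "slow_wave": "looking around"}
    eeg = [2.0, -2.0, 2.0, -2.0, 3.0, 3.0, -3.0, -3.0, 0.0, 0.0, 0.0, 0.0]
    print(detect_behaviors(eeg, templates, behaviors))
    # [(0, 'flying'), (4, 'looking around')]
```

Comparing every window against every template is about the simplest reading of “using the patterns like filters”; a fuller version might use overlapping windows or band-pass filter the signal first.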

We also use robot vision for navigation and for keeping the robot on its pedestal. The camera is mounted about three feet above the robot and is not shown in the documentation.
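How the overhead camera keeps the robot on its pedestal is not explained. Assuming some tracker already reports the robot’s position in the camera image, a minimal Python sketch of the pedestal check could steer the robot back toward the center whenever it drifts past a safety margin. The pixel coordinates, circular pedestal, radius, margin and function name are all hypothetical.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]


def pedestal_correction(robot_xy: Point, center_xy: Point,
                        radius: float, margin: float = 0.8) -> Optional[float]:
    """Return a heading (radians, image coordinates) back toward the pedestal
    center, or None if the robot is still safely inside the margin.

    Hypothetical: positions are pixel coordinates from the overhead camera,
    and the pedestal is modeled as a circle of the given radius.
    """
    dx = center_xy[0] - robot_xy[0]
    dy = center_xy[1] - robot_xy[1]
    # Inside the safe zone (margin * radius from the center): no correction.
    if math.hypot(dx, dy) <= margin * radius:
        return None
    # Otherwise point back toward the center. Note that image y grows downward.
    return math.atan2(dy, dx)


if __name__ == "__main__":
    center = (320.0, 240.0)
    print(pedestal_correction((330.0, 240.0), center, radius=100.0))  # None
    # Robot has drifted 90 px to the right, past the 80 px margin:
    print(pedestal_correction((410.0, 240.0), center, radius=100.0))
    # 3.141592653589793, i.e. head back toward negative x
```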


What do you think the robot can bring to our understanding of possible human-robot relationships?

Sleep Waking is a metaphor for a reality that could be in our future. In the piece we use a fair amount of artistic license. Though the eye positioning data is a literal interpretation, what we do with the EEG data is a bit more subjective. However, perhaps one day we will have the technology to literally allow a robot to act out what we do in our dreams. What could we learn from seeing our dreams played back for us? Will we save our dreams like we save our photographs?

Taking a wider view, robots are increasingly used to augment human experience. With robotic prosthetic devices, personalized web presences and implanted RFID chips, technology is moving from being an externalized tool to being a literal extension of who we are. By giving an example of this process and drawing attention to it, we hope to give people the opportunity to think critically about what personalized technology actually means.

Did you use an existing robot or did you build it from scratch?

We used a modified Kondo KHR-2HV humanoid robot. In the next iteration of this piece, we will be fabricating my own design for a humanoid robot.

Thanks Fernando!

See Sleep Waking at the BRAINWAVE: Common Senses exhibition, which opens on February 16 at Exit Art.

Another of Fernando’s works, 8520 S.W.27th Pl. v.2, is still on view in the Emergentes exhibition at the LABoral center in Gijon, Spain, until May 12, 2008.

One more from the work-in-progress exhibition at the Royal College of Art in London.

Tuur Van Balen, a Design Interactions student, turned his attention to drinking water.

In the UK, the US and other countries, water service companies add fluoride to the water to reduce tooth decay. It has also been said that the army in some countries added bromide to drinking water in order to quell sexual arousal among male soldiers. In some areas, male fish turn female because the oestrogen in birth-control pills ends up in the river.

My City = My Body is part of ongoing research into future biological interactions with the city, and more precisely into how our growing understanding of DNA and the rise of biotechnologies will change the way we interact with each other and with our environment.

The first chapter of this exploration of new biological interactions is dedicated to Thames Water, London’s largest ‘drinking water and wastewater service company’. Using the work-in-progress show at the RCA as a platform, he offered tap water (kindly provided by Thames Water) and asked visitors to donate a urine sample along with their postcode. He then added the samples and postcodes to a map of London that charts biological information.

Because I liked his project a lot and wanted to make sure I wouldn’t write anything too silly about it, I asked Tuur to give us more details about his research. I’m passing the microphone to him:

The installation / intervention in the show is part of a bigger, ongoing project called My City = My Body. I’m interested in how cities are made up not so much of streets and buildings as of our behaviour and experiences. (The London of a design student cycling from Shoreditch to South Ken is totally different from the London of a banker in a black cab with his BlackBerry and a loft in Notting Hill.) These experiences are heavily mediated by technology; just look at the way mobile communication networks have totally reshaped our cities.

What I’m interested in is how future technologies might influence our urban behaviour. We’re on the verge of a new era, one that relies on the understanding of our bodies and of our DNA. What does this mean for the cities of tomorrow? Will we have DNA surveillance and discrimination? Bio-identities and communities? …

The biological map in the interim show was an ‘intervention’ that used the show as a platform to get feedback on these ideas. By gathering urine samples, I want to make people think about how their biological waste contains information. Pissing in public might become like leaving your digital data up for grabs, and spitting in the street like leaving your computer unprotected on the internet.

Unfortunately, I was not allowed to test the urine samples in the show. England being the Mecca of Health & Safety, it was a struggle that took me all the way up to Senior Management just to exhibit my project in this form (“Bio-hazard, sir!”). Continuing this research, I’m trying to get in touch with a laboratory to look at bacteria: how bacteria could be a way of transforming our biological waste into information (inspired by Drew Endy’s BioBricks project), and what the consequences in our everyday lives could be…

Thanks Tuur!

Images of the project courtesy of Tuur Van Balen.